Designing For TV: Principles, Patterns And Practical Guidance (Part 2)

Milan Balać 2025-09-04T10:00:00+00:00

2025-09-10T15:02:59+00:00

Having covered the developmental history and legacy of TV in Part 1, let’s now delve into more practical matters. As a quick reminder, the “10-foot experience” and its reliance on the six core buttons of any remote form the basis of our efforts, and as you’ll see, most principles outlined simply reinforce the unshakeable foundations.

In this article, we’ll sift through the systems, account for layout constraints, and distill the guidelines to understand the essence of TV interfaces. Once we’ve collected all the main ingredients, we’ll see what we can do to elevate these inherently simplistic experiences.

Let’s dig in, and let’s get practical!

The Systems

When it comes to hardware, TVs and set-top boxes are usually a few generations behind phones and computers. Their components are made to run lightweight systems optimised for viewing, energy efficiency, and longevity. Yet even within these constraints, different platforms offer varying performance profiles, conventions, and price points.

Some notable platforms/systems of today are:

- Roku, the most affordable and popular, but severely bottlenecked by weak hardware.

- WebOS, most common on LG devices, relies on web standards and runs well on modest hardware.

- Android TV, considered very flexible and customisable, but relatively demanding hardware-wise.

- Amazon Fire, based on Android but with a separate ecosystem. It offers smooth performance but is slightly more limited than stock Android.

- tvOS, by Apple, offers a high-end experience at a high-end price, with very little room for customization.

Despite their differences, all of the platforms above share something in common, and by now you’ve probably guessed that it has to do with the remote. Let’s take a closer look:

If these remotes were stripped down to just the D-pad, OK, and BACK buttons, they would still be capable of navigating any TV interface. This shared control scheme is what lets this article take a platform-agnostic approach, with guidelines that apply broadly regardless of the manufacturer.

Having already discussed the TV remote in detail in Part 1, let’s turn to the second part of the equation: the TV screen, its layout, and the fundamental building blocks of TV-bound experiences.

TV Design Fundamentals

The Screen

With almost one hundred years of legacy, TV has accumulated quite some baggage. One recurring topic in modern articles on TV design is the concept of “overscan” — a legacy concept from the era of cathode ray tube (CRT) screens. Back then, the lack of standards in production meant that television sets would often crop the projected image at its edges. To address this inconsistency, broadcasters created guidelines to keep important content from being cut off.

While overscan gets mentioned occasionally, we should call it what it really is — a thing of the past. Modern panels display content with greater precision, making thinking in terms of title and action safe areas rather archaic. Today, we can simply consider the margins and get the same results.

Google calls for a 5% margin, while Apple’s Layout guidelines advise a 60-point margin at the top and bottom and 80 points on the sides. There is no single agreed standard, but the takeaway is simple: leave some breathing room between the screen edge and the content, like you would in any thoughtful layout.
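To make the two recommendations easier to compare, here is a minimal sketch that turns each into a content area. It assumes a 1920×1080 canvas and treats Apple’s points as equal to pixels on that canvas; both are assumptions for illustration, and the names `SafeArea`, `percent_margin_area`, and `fixed_margin_area` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SafeArea:
    """Rectangle left for content once the margins are removed."""
    left: int
    top: int
    width: int
    height: int


def percent_margin_area(screen_w: int, screen_h: int, percent: float = 5.0) -> SafeArea:
    """Content area after a percentage margin on every edge (the 5% recommendation)."""
    margin_x = round(screen_w * percent / 100)
    margin_y = round(screen_h * percent / 100)
    return SafeArea(margin_x, margin_y, screen_w - 2 * margin_x, screen_h - 2 * margin_y)


def fixed_margin_area(screen_w: int, screen_h: int, side: int = 80, top_bottom: int = 60) -> SafeArea:
    """Content area after fixed margins (80 on the sides, 60 top and bottom)."""
    return SafeArea(side, top_bottom, screen_w - 2 * side, screen_h - 2 * top_bottom)


# Assumed canvas size: 1920x1080.
print(percent_margin_area(1920, 1080))
print(fixed_margin_area(1920, 1080))
```

On this assumed canvas, the two approaches land within a few dozen pixels of each other, which supports the point that the exact number matters less than leaving some room.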

Having left some baggage behind, we can start considering what to put within and outside the defined bounds.

The Layout

Considering the device is made for content consumption, streaming apps such as Netflix naturally come to mind. Broadly speaking, all these interfaces share a common layout structure where a vast collection of content is laid out in a simple grid.

These horizontally scrolling groups (sometimes referred to as “shelves”) resemble rows of a bookcase. Typically, they’ll contain dozens of items that don’t fit into the initial “fold”, so we’ll make sure the last visible item “peeks” from the edge, subtly indicating to the viewer there’s more content available if they continue scrolling.

If we were to define a standard 12-column layout grid, with a 2-column-wide item, we’d end up with something like this:

As you can see, the last item falls outside the “safe” zone.

Tip: A useful trick I discovered when designing TV interfaces was to utilise an odd number of columns. This allows the last item to fall within the defined margins and be more prominent while having little effect on the entire layout. We’ve concluded that overscan is not a prominent issue these days, yet an additional column in the layout helps completely circumvent it. Food for thought!
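One way to read this tip is as simple arithmetic in column units. The sketch below ignores gutters and assumes every item spans the same number of columns; the function name `shelf_fit` is hypothetical.

```python
from typing import Tuple


def shelf_fit(columns: int, item_span: int) -> Tuple[int, int]:
    """Return (items fully visible, columns of the next item still inside the margins)."""
    if columns <= 0 or item_span <= 0:
        raise ValueError("columns and item_span must be positive")
    return divmod(columns, item_span)


# 12 columns with 2-column items: six items fit exactly, so the next one
# starts right at the margin and sits entirely outside it.
print(shelf_fit(12, 2))  # (6, 0)

# 13 columns: six items fit, and one column of the seventh "peeks" inside the margins.
print(shelf_fit(13, 2))  # (6, 1)
```

With an even item span, an odd column count always leaves a remainder, and that remainder is the part of the “peeking” item that stays inside the margins.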

Typography

TV design requires us to practice restraint, and this becomes very apparent when working with type. All good typography practices apply to TV design too, but I’d like to point out two specific takeaways.

First, accounting for the distance, everything (including type) needs to scale up. Where 16–18px might suffice for web baseline text, 24px should be your starting point on TV, with the rest of the scale increasing proportionally.

“Typography can become especially tricky in 10-ft experiences. When in doubt, go larger.”

— Molly Lafferty (Marvel Blog)

With that in mind, the second piece of advice would be to start with a small scale of five or six sizes and adjust if necessary. The simplicity of a TV experience can, and should, be reflected in the typography itself, and while small, such a scale will do all the “heavy lifting” if set correctly.

What you see in the example above is a scale I reduced from Google and Apple guidelines, with a few size adjustments. Simple as it is, this scale served me well for years, and I have no doubt it could do the same for you.

Freebie

If you’d like to use my reduced type scale Figma file to kick off your own TV project, feel free to do so!

Color

Imagine watching TV at night with the device being the only source of light in the room. You open up the app drawer and select a new streaming app; it loads into a pretty splash screen, and — bam! — a bright interface opens up, which, amplified by the dark surroundings, blinds you for a fraction of a second. That right there is our main consideration when using color on TV.

Built for cinematic experiences and often used in dimly lit environments, TVs lend themselves perfectly to darker and more subdued interfaces. Bright colors, especially pure white (#ffffff), translate to maximum luminance and may strain the eyes. As a general principle, you should rely on a more muted color palette. Slightly tinting brighter elements with your brand color, or with undertones of yellow to imitate natural light, will produce less visually unsettling results.
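To put a number on “maximum luminance”, the sketch below uses the relative luminance formula from the WCAG 2 definition for sRGB colors. The off-white value `#f2efe6` is a hypothetical tint chosen for illustration, not a recommendation from any guideline.

```python
def relative_luminance(hex_color: str) -> float:
    """WCAG 2 relative luminance of an sRGB color: 0.0 is black, 1.0 is pure white."""
    digits = hex_color.lstrip("#")
    if len(digits) != 6:
        raise ValueError("expected a color like #rrggbb")
    channels = [int(digits[i:i + 2], 16) / 255 for i in (0, 2, 4)]

    def linearize(c: float) -> float:
        # Undo the sRGB transfer curve before weighting the channels.
        return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (linearize(c) for c in channels)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


print(relative_luminance("#ffffff"))  # pure white sits at the top of the scale
print(relative_luminance("#f2efe6"))  # hypothetical warm off-white, noticeably lower
```

A check like this can flag large surfaces that sit too close to pure white before they reach a dark living room.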

Finally, without a pointer or touch capabilities, it’s crucial to clearly highlight interactive elements. While using bright colors as backdrops may be overwhelming, using them sparingly to highlight element states in a highly contrasting way will work perfectly.

This highlighting of UI elements is what TV leans on heavily — and it is what we’ll discuss next.

Focus

In Part 1, we covered how interacting through a remote implies a certain detachment from the interface, mandating reliance on a focus state to carry the burden of TV interaction. This is done by visually accenting elements to anchor the user’s eyes and map any subsequent movement within the interface.

If you have ever written HTML/CSS, you might recall the use of the :focus CSS pseudo-class. While it’s primarily an accessibility feature on the web, it’s the core of interaction on TV, with more flexibility added in the form of two additional directions thanks to a dedicated D-pad.

Focus Styles

There are a few standard ways to style a focus state. Firstly, there’s scaling — enlarging the focused element, which creates the illusion of depth by moving it closer to the viewer.

Another common approach is to invert background and text colors.

Finally, a border may be added around the highlighted element.

These styles, used independently or in various combinations, appear in all TV interfaces. While execution may be constrained by the specific system, the purpose remains the same: clear and intuitive feedback, even from across the room.

Having set the foundations of interaction, layout, and movement, we can start building on top of them. The next chapter will cover the most common elements of a TV interface, their variations, and a few tips and tricks for button-bound navigation.

Common TV UI Components

Nowadays, the core user journey on television revolves around browsing (or searching through) a content library, selecting an item, and opening a dedicated screen to watch or listen.

This translates into a few fundamental screens:

- Library (or Home) for content browsing,

- Search for specific queries, and

- A player screen focused on content playback.

These screens are built with a handful of components optimized for the 10-foot experience, and while they are often found on other platforms too, it’s worth examining how they differ on TV.

Menus

Appearing as a horizontal bar along the top edge of the screen, or as a vertical sidebar, the menu helps move between the different screens of an app. While its orientation mostly depends on the specific system, it does seem TV favors the side menu a bit more.

Both menu types share a common issue: the farther the user navigates away from the menu (vertically, toward the bottom for top-bars; and horizontally, toward the right for sidebars), the more button presses are required to get back to it. Fortunately, a Back button shortcut is usually added to move focus straight to the menu, which greatly improves usability.

That said, the problem arises a lot sooner for top menus. Paired with the issue of having to hide or fade the element, this makes a persistent sidebar the more common pick in TV user interfaces, and one that allows for a more consistent experience.

Shelves, Posters, And Cards

We’ve already mentioned shelves when covering layouts; now let’s shed some more light on this topic. The “shelves” (horizontally scrolling groups) form the basis of TV content browsing and are commonly populated with posters in three different aspect ratios: 2:3, 16:9, and 1:1.

2:3 posters are common in apps specializing in movies and shows. Their vertical orientation references traditional movie posters, harkening back to the cinematic experiences TVs are built for. Moreover, their narrow shape allows more items to be immediately visible in a row, and they rarely require any added text, with titles baked into the poster image.

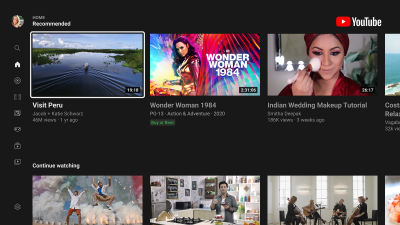

16:9 posters abide by the same principles but with a horizontal orientation. They are often paired with text labels, which effectively turn them into cards, commonly seen on platforms like YouTube. In the absence of dedicated poster art, they show stills or playback from the videos, matching the aspect ratio of the media itself.

1:1 posters are often found in music apps like Spotify, their shape reminiscent of album art and vinyl sleeves. These squares often get used in other instances, like representing channel links or profile tiles, giving more visual variety to the interface.

All of the above can co-exist within a single app, allowing for richer interfaces and breaking up otherwise uniform content libraries.

And speaking of breaking up content, let’s see what we can do with spotlights!

Spotlights

Typically taking up the entire width of the screen, these eye-catching components will highlight a new feature or a promoted piece of media. In a sea of uniform shelves, they can be placed strategically to introduce aesthetic diversity and disrupt the monotony.

A spotlight can be a focusable element by itself, or it could expose several actions thanks to its generous space. In my ventures into TV design, I relied on a few different spotlight sizes, which allowed me to place multiples into a single row, all with the purpose of highlighting different aspects of the app, without breaking the form viewers were used to.

Posters, cards, and spotlights shape the bulk of the visual experience and content presentation, but viewers still need a way to find specific titles. Let’s see how search and input are handled on TV.

Search And Entering Text

Manually browsing through content libraries can yield results, but having the ability to search will speed things up — though not without some hiccups.

TVs allow for text input in the form of on-screen keyboards, similar to the ones found in modern smartphones. However, inputting text with a remote control is quite inefficient given the restrictiveness of its control scheme. For example, typing “hey there” on a mobile keyboard requires 9 keystrokes, but about 38 on a TV (!) due to the movement between characters and their selection.
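The gap between the two counts is easy to reproduce. The sketch below counts D-pad presses on a hypothetical six-column alphabetical grid, assuming focus starts on “a”, there is no wrap-around at the edges, and every character needs one extra OK press. Real keyboards differ in layout, so the exact total will vary.

```python
# Hypothetical on-screen keyboard: six columns, alphabetical, space after "z".
KEY_ROWS = ("abcdef", "ghijkl", "mnopqr", "stuvwx", "yz ")

POSITIONS = {
    char: (row, col)
    for row, keys in enumerate(KEY_ROWS)
    for col, char in enumerate(keys)
}


def dpad_presses(text: str, start: str = "a") -> int:
    """Count directional moves plus one OK press per character."""
    row, col = POSITIONS[start]
    presses = 0
    for char in text.lower():
        target_row, target_col = POSITIONS[char]  # KeyError if the key is not on this layout
        presses += abs(target_row - row) + abs(target_col - col)  # moves between keys
        presses += 1  # OK press to select the character
        row, col = target_row, target_col
    return presses


print(len("hey there"))           # 9 taps on a touch keyboard
print(dpad_presses("hey there"))  # 39 presses on this hypothetical grid
```

On this layout the phrase takes 39 presses, in the same range as the estimate above.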

Typing with a D-pad may be an arduous task, but at the same time, having the ability to search is unquestionably useful.

Luckily for us, keyboards are accounted for in all systems and usually come in two varieties. We’ve got the grid layouts used by most platforms and a horizontal layout in support of the touch-enabled and gesture-based controls on tvOS. Swiping between characters is significantly faster, but this is yet another pattern that can only be enhanced, not replaced.

Modernization has made things significantly easier, with search autocomplete suggestions, device pairing, voice controls, and remotes with physical keyboards, but on-screen keyboards will likely remain a necessary fallback for quite a while. And no matter how cumbersome this fallback may be, we as designers need to consider it when building for TV.

Players And Progress Bars

While all the different sections of a TV app serve a purpose, the Player takes center stage. It’s where all roads eventually lead, and where viewers will spend the most time. It’s also one of the rare instances where focus gets lost, allowing the interface to get out of the way of enjoying a piece of content.

Arguably, players are the most complex features of TV apps, packing all the different functionalities into a single screen. Take YouTube, for example: its player doesn’t just handle the expected playback controls but also supports content browsing, searching, reading comments, reacting, and navigating to channels, all within a single screen.

Compared to YouTube, Netflix offers a very lightweight experience guided by the nature of the app.

Still, every player has a basic set of controls, the foundation of which is the progress bar.

The progress bar UI element serves as a visual indicator for content duration. During interaction, focus doesn’t get placed on the bar itself, but on a movable knob known as the “scrubber.” It is by moving the scrubber left and right, or stopping it in its tracks, that we can control playback.

Another indirect method of invoking the progress bar is with the good old Play and Pause buttons. Rooted in the mechanical era of tape players, the universally understood triangle and two vertical bars are as integral to the TV legacy as the D-pad. No matter how minimalist and sleek the modern player interface may be, these symbols remain a staple of the viewing experience.

The presence of a scrubber may also indicate the type of content. Video on demand allows for the full set of playback controls, while live streams (unless DVR is involved) will do away with the scrubber since viewers won’t be able to rewind or fast-forward.

Earlier iterations of progress bars often came bundled with a set of playback control buttons, but as viewers got used to the tools available, these controls often got consolidated into the progress bar and scrubber themselves.

Bringing It All Together

With the building blocks out of the box, we’ve got everything necessary for a basic but functional TV app. Just as the six core buttons make remote navigation possible, the components and principles outlined above help guide purposeful TV design. The more context you bring, the more you’ll be able to expand and combine these basic principles, creating an experience unique to your needs.

Before we wrap things up, I’d like to share a few tips and tricks I discovered along the way — tips and tricks which I wish I had known from the start. Regardless of how simple or complex your idea may be, these may serve you as useful tools to help add depth, polish, and finesse to any TV experience.

Thinking Beyond The Basics

Like any platform, TV has a set of constraints that we abide by when designing. But sometimes these norms are applied without question, making the already limited capabilities feel even more restraining. Below are a handful of less obvious ideas that can help you design more thoughtfully and flexibly for the big screen.

Long Press

Most modern remotes support press-and-hold gestures as a subtle way to enhance the functionality, especially on remotes with fewer buttons available.

For example, holding directional buttons when browsing content speeds up scrolling, while holding Left/Right during playback speeds up timeline seeking. In many apps, a single press of the OK button opens a video, but holding it for longer opens a contextual menu with additional actions.

While not immediately apparent, press-and-hold appears throughout TV experiences, essentially doubling the capabilities of a single button. Depending on context, you can map an additional action to certain buttons and give more depth to the interface without making it convoluted.
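As a rough sketch of how one button can carry two actions, the code below classifies a press by how long the button was held. The 500 ms threshold and the action names are assumptions for illustration; each platform defines its own timing and events.

```python
LONG_PRESS_MS = 500  # assumed threshold, not taken from any platform guideline

# Hypothetical actions for the OK button while browsing content.
OK_ACTIONS = {"short": "open_video", "long": "open_context_menu"}


def classify_press(down_ms: int, up_ms: int, threshold_ms: int = LONG_PRESS_MS) -> str:
    """Return "long" when the button was held for at least the threshold, else "short"."""
    if up_ms < down_ms:
        raise ValueError("button release cannot come before the press")
    return "long" if up_ms - down_ms >= threshold_ms else "short"


def ok_button_action(down_ms: int, up_ms: int) -> str:
    """Pick the OK button's action from the press duration."""
    return OK_ACTIONS[classify_press(down_ms, up_ms)]


print(ok_button_action(0, 120))  # quick tap
print(ok_button_action(0, 800))  # press and hold
```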

And speaking of mapping, let’s see how we can utilize it to our benefit.

Remapping Keys And The Importance Of Context

While not as flexible as long-press, button functions can be contextually remapped. For example, Amazon’s Prime Video maps the Up button to open its X-Ray feature during playback. Typically, all directional buttons open video controls, so repurposing one for a custom feature cleverly adds interactivity with little tradeoff.

With limited input, context becomes a powerful tool. It not only declutters the interface to allow for more focus on specific tasks, but also enables the same set of buttons to trigger different actions based on the viewer’s location within an app.

Another great example is YouTube’s scrubber interaction. Once the scrubber is moved, every other UI element fades. This cleans up the viewer’s working area, so to speak, narrowing the interface to a single task. In this state — and only in this state — pressing Up one more time moves away from scrubbing and into browsing by chapter.

This is an elegant example of working within restraint and adding more only when necessary. I hope it inspires similar interactions in your TV app designs.
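Contextual remapping can be sketched as a default key map plus per-context overrides. The contexts and action names below are hypothetical and only loosely modeled on the two examples above; they do not describe how either app is implemented.

```python
# Default behavior in a player: any directional button reveals the controls.
PLAYER_DEFAULTS = {key: "show_controls" for key in ("up", "down", "left", "right")}

# Hypothetical overrides: only the listed keys change meaning in each context.
CONTEXT_OVERRIDES = {
    "watching": {"up": "open_extra_info"},   # a custom feature on one button
    "scrubbing": {"up": "browse_chapters"},  # only available once the scrubber has moved
}


def resolve_action(context: str, key: str) -> str:
    """Look up the action for a key, preferring the current context's override."""
    if key not in PLAYER_DEFAULTS:
        raise KeyError(f"unsupported key: {key}")
    return CONTEXT_OVERRIDES.get(context, {}).get(key, PLAYER_DEFAULTS[key])


print(resolve_action("watching", "up"))
print(resolve_action("watching", "down"))
print(resolve_action("scrubbing", "up"))
```

Keeping the overrides small makes the tradeoff visible: each context changes one button and leaves the rest predictable.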

Efficient Movement On TV

Even at its best, every action on TV “costs” at least one click. There’s no such thing as aimless cursor movement: if you want to move, you must press a button. We’ve seen how cumbersome this can be inside a keyboard, but there’s also something we can learn about efficient movement in these restrained circumstances.

Going back to the Home screen, we can note that vertical and horizontal movement serve two distinct roles. Vertical movement switches between groups, while horizontal movement switches items within these groups. No matter how far you’ve gone inside a group, a single vertical click will move you into another.

This subtle difference, two axes with separate roles, is the most efficient way of moving in a TV interface. Reversing the pattern (horizontal to switch groups, vertical to drill down) will work like a charm as long as you keep the role of each axis well defined.

Quietly brilliant and easy to overlook, this pattern powers almost every step of the TV experience. Remember it, and use it well.
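The two-axis pattern can be modeled in a few lines. In this sketch, vertical presses switch shelves and horizontal presses move within the current shelf. Remembering the last focused item per shelf is an added assumption, and the class name `ShelfFocus` is hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ShelfFocus:
    """Tracks focus on a screen made of horizontally scrolling shelves."""
    shelf_sizes: List[int]                             # number of items in each shelf
    shelf: int = 0                                     # currently focused shelf
    columns: List[int] = field(default_factory=list)   # remembered item per shelf

    def __post_init__(self) -> None:
        if not self.columns:
            self.columns = [0] * len(self.shelf_sizes)

    def press(self, key: str) -> Tuple[int, int]:
        """Apply one D-pad press and return (shelf, item) after the move."""
        if key == "down":
            self.shelf = min(self.shelf + 1, len(self.shelf_sizes) - 1)
        elif key == "up":
            self.shelf = max(self.shelf - 1, 0)
        elif key == "right":
            last = self.shelf_sizes[self.shelf] - 1
            self.columns[self.shelf] = min(self.columns[self.shelf] + 1, last)
        elif key == "left":
            self.columns[self.shelf] = max(self.columns[self.shelf] - 1, 0)
        else:
            raise ValueError(f"unsupported key: {key}")
        return self.shelf, self.columns[self.shelf]


focus = ShelfFocus([20, 20, 5])
for _ in range(7):
    focus.press("right")     # seven items deep into the first shelf
print(focus.press("down"))   # one press switches shelves: (1, 0)
print(focus.press("up"))     # and one press returns: (0, 7)
```

However deep the viewer has scrolled, leaving the shelf always costs a single press.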

Thinking Beyond JPGs

After covering in detail many of the technicalities, let’s finish with some visual polish.

Most TV interfaces are driven by tightly packed rows of cover and poster art. While often beautifully designed, this type of content and layout leaves little room for visual flair. For years, the flat JPG, with its small file size, has been a go-to format, though contemporary alternatives like WebP are slowly taking its place.

Meanwhile, we can rely on the tried and tested PNG to give a bit more shine to our TV interfaces. The simple fact that it supports transparency can help the often-rigid UIs feel more sophisticated. Used strategically and paired with simple focus effects such as background color changes, PNGs can bring subtle moments of delight to the interface.

Moreover, if transformations like scaling and rotating are supported, you can really make those rectangular shapes come alive by layering multiple assets.

As you probably understand by now, these little touches of finesse don’t step outside the bounds of what’s possible. They simply find more room to breathe within them. But with such limited capabilities, it’s best to learn all the different tricks that can help make your TV experiences stand out.

Closing Thoughts

Rooted in legacy, with a limited control scheme and a rather “shallow” interface, TV design reminds us to do the best with what we have at our disposal. The restraints I outlined are not meant to induce claustrophobia and make you feel limited in your design choices, but rather to serve you as guides. It is by accepting that fact that we can find freedom and new avenues to explore.

This two-part series of articles, just like my experience designing for TV, was not about reinventing the wheel with radical ideas. It was about understanding its nuances and contributing to what’s already there with my personal touch.

If you find yourself working in this design field, I hope my guide will serve as a warm welcome and will help you do your finest work. And if you have any questions, do leave a comment, and I will do my best to reply and help.

Good luck!

Further Reading

- “Design for TV,” by Android Developers

Great TV design is all about putting content front and center. It’s about creating an interface that’s easier to use and navigate, even from a distance. It’s about making it easier to find the content you love, and to enjoy it in the best possible quality.

- “TV Guidelines: A quick kick-off on designing for Television Experiences,” by Andrea Pacheco

Just like designing a mobile app, designing a TV application can be a fun and complex thing to do, due to the numerous guidelines and best practices to follow. Below, I have listed the main best practices to keep in mind when designing an app for a 10-foot screen.

- “Designing for Television – TV UI Design,” by Molly Lafferty (Marvel Blog)

We’re no longer limited to a remote and cable box to control our TVs; we’re using Smart TVs, or streaming from set-top boxes like Roku and Apple TV, or using video game consoles like Xbox and PlayStation. And each of these devices allows a user interface that’s much more powerful than your old-fashioned on-screen guide.

- “Rethinking User Interface Design for the TV Platform,” by Pascal Potvin

Designing for television has become part of the continuum of devices that require a rethink of how we approach user interfaces and user experiences.

- “Typography for TV,” by Android Developers

As television screens are typically viewed from a distance, interfaces that use larger typography are more legible and comfortable for users. TV Design’s default type scale includes contrasting and flexible type styles to support a wide range of use cases.

- “Typography,” by Apple Developer docs

Your typographic choices can help you display legible text, convey an information hierarchy, communicate important content, and express your brand or style.

- “Color on TV,” by Android Developers

Color on TV design can inspire, set the mood, and even drive users to make decisions. It’s a powerful and tangible element that users notice first. As a rich way to connect with a wide audience, it’s no wonder color is an important step in crafting a high-quality TV interface.

(mb, yk)

Prompting Is A Design Act: How To Brief, Guide And Iterate With AI

Lyndon Cerejo 2025-08-29T10:00:00+00:00

2025-09-03T15:02:57+00:00

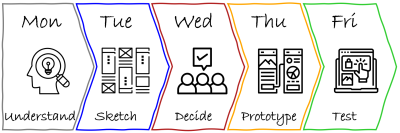

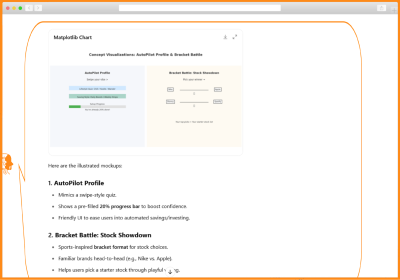

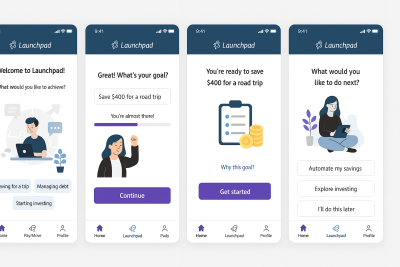

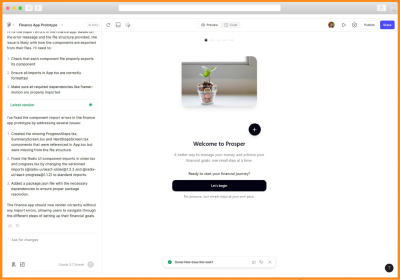

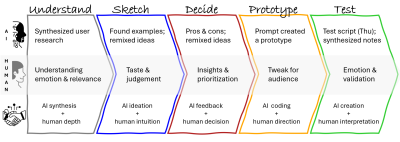

In “A Week In The Life Of An AI-Augmented Designer”, we followed Kate’s weeklong journey of her first AI-augmented design sprint. She had three realizations through the process:

- AI isn’t a co-pilot (yet); it’s more like a smart, eager intern.

One with access to a lot of information, good recall, fast execution, but no context. That mindset defined how she approached every interaction with AI: not as magic, but as management.

- Don’t trust; guide, coach, and always verify.

Like any intern, AI needs coaching and supervision, and that’s where her designerly skills kicked in. Kate relied on curiosity to explore, observation to spot bias, empathy to humanize the output, and critical thinking to challenge what didn’t feel right. Her learning mindset helped her keep up with advances, and experimentation helped her learn by doing.

- Prompting is part creative brief, and part conversation design, just with an AI instead of a person.

When you prompt an AI, you’re not just giving instructions, but designing how it responds, behaves, and outputs information. If AI is like an intern, then the prompt is your creative brief that frames the task, sets the tone, and clarifies what good looks like. It’s also your conversation script that guides how it responds, how the interaction flows, and how ambiguity is handled.

As designers, we’re used to designing interactions for people. Prompting is us designing our own interactions with machines — it uses the same mindset with a new medium. It shapes an AI’s behavior the same way you’d guide a user with structure, clarity, and intent.

If you’ve bookmarked, downloaded, or saved prompts from others, you’re not alone. We’ve all done that during our AI journeys. But while someone else’s prompts are a good starting point, you will get better and more relevant results if you can write your own prompts tailored to your goals, context, and style. Using someone else’s prompt is like using a Figma template. It gets the job done, but mastery comes from understanding and applying the fundamentals of design, including layout, flow, and reasoning. Prompts have a structure too. And when you learn it, you stop guessing and start designing.

Note: All prompts in this article were tested using ChatGPT — not because it’s the only game in town, but because it’s friendly, flexible, and lets you talk like a person, yes, even after the recent GPT-5 “update”. That said, any LLM with a decent attention span will work. Results for the same prompt may vary based on the AI model you use, the AI’s training, mood, and how confidently it can hallucinate.

Privacy PSA: As always, don’t share anything you wouldn’t want leaked, logged, or accidentally included in the next AI-generated meme. Keep it safe, legal, and user-respecting.

With that out of the way, let’s dive into the mindset, anatomy, and methods of effective prompting as another tool in your design toolkit.

Mindset: Prompt Like A Designer

As designers, we storyboard journeys, wireframe interfaces to guide users, and write UX copy with intention. However, when prompting AI, we treat it differently: “Summarize these insights”, “Make this better”, “Write copy for this screen”, and then wonder why the output feels generic, off-brand, or just meh. It’s like expecting a creative team to deliver great work from a one-line Slack message. We wouldn’t brief a freelancer, much less an intern, with “Design a landing page,” so why brief AI that way?

Prompting Is A Creative Brief For A Machine

Think of a good prompt as a creative brief, just for a non-human collaborator. It needs similar elements, including a clear role, defined goal, relevant context, tone guidance, and output expectations. Just as a well-written creative brief unlocks alignment and quality from your team, a well-structured prompt helps the AI meet your expectations, even though it doesn’t have real instincts or opinions.

Prompting Is Also Conversation Design

A good prompt goes beyond defining the task and sets the tone for the exchange by designing a conversation: guiding how the AI interprets, sequences, and responds. You shape the flow of tasks, how ambiguity is handled, and how refinement happens — that’s conversation design.

Anatomy: Structure It Like A Designer

So how do you write a designer-quality prompt? That’s where the W.I.R.E.+F.R.A.M.E. prompt design framework comes in: a UX-inspired framework for writing intentional, structured, and reusable prompts. Each letter represents a key design direction, grounded in the way UX designers already think. Just as a wireframe doesn’t dictate final visuals, the WIRE+FRAME framework doesn’t constrain creativity, but guides the AI with the structured information it needs.

“Why not just use a series of back-and-forth chats with AI?”

You can, and many people do. But without structure, AI fills in the gaps on its own, often with vague or generic results. A good prompt upfront saves time, reduces trial and error, and improves consistency. And whether you’re working on your own or across a team, a framework means you’re not reinventing a prompt every time but reusing what works to get better results faster.

Just as we build wireframes before adding layers of fidelity, the WIRE+FRAME framework has two parts:

- WIRE is the must-have skeleton. It gives the prompt its shape.

- FRAME is the set of enhancements that bring polish, logic, tone, and reusability — like building a high-fidelity interface from the wireframe.

Let’s improve Kate’s original research synthesis prompt (“Read this customer feedback and tell me how we can improve financial literacy for Gen Z in our app”). To better reflect how people actually prompt in practice, let’s tweak it to a more broadly applicable version: “Read this customer feedback and tell me how we can improve our app for Gen Z users.” This one-liner mirrors the kinds of prompts we often throw at AI tools: short, simple, and often lacking structure.

Now, we’ll take that prompt and rebuild it, starting with the four elements of W.I.R.E., the core building blocks that provide AI with the main information it needs to deliver useful results.

W: Who & What

Define who the AI should be, and what it’s being asked to deliver.

A creative brief starts with assigning the right hat. Are you briefing a copywriter? A strategist? A product designer? The same logic applies here. Give the AI a clear identity and task. Treat AI like a trusted freelancer or intern. Instead of saying “help me”, tell it who it should act as and what’s expected.

Example: “You are a senior UX researcher and customer insights analyst. You specialize in synthesizing qualitative data from diverse sources to identify patterns, surface user pain points, and map them across customer journey stages. Your outputs directly inform product, UX, and service priorities.”

I: Input Context

Provide background that frames the task.

Creative partners don’t work in a vacuum. They need context: the audience, goals, product, competitive landscape, and what’s been tried already. This is the “What you need to know before you start” section of the brief. Think: key insights, friction points, business objectives. The same goes for your prompt.

Example: “You are analyzing customer feedback for Fintech Brand’s app, targeting Gen Z users. Feedback will be uploaded from sources such as app store reviews, survey feedback, and usability test transcripts.”

R: Rules & Constraints

Clarify any limitations, boundaries, and exclusions.

Good creative briefs always include boundaries — what to avoid, what’s off-brand, or what’s non-negotiable. Things like brand voice guidelines, legal requirements, or time and word count limits. Constraints don’t limit creativity — they focus it. AI needs the same constraints to avoid going off the rails.

Example: “Only analyze the uploaded customer feedback data. Do not fabricate pain points, representative quotes, journey stages, or patterns. Do not supplement with prior knowledge or hypothetical examples. Use clear, neutral, stakeholder-facing language.”

E: Expected Output

Spell out what the deliverable should look like.

This is the deliverable spec: What does the finished product look like? What tone, format, or channel is it for? Even if the task is clear, the format often isn’t. Do you want bullet points or a story? A table or a headline? If you don’t say, the AI will guess, and probably guess wrong. Even better, include an example of the output you want; it’s an effective way to show AI what you’re expecting. If you’re using GPT-5, you can also mix examples across formats (text, images, tables).

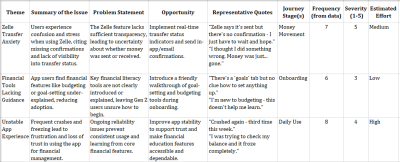

Example: “Return a structured list of themes. For each theme, include:

- Theme Title

- Summary of the Issue

- Problem Statement

- Opportunity

- Representative Quotes (from data only)

- Journey Stage(s)

- Frequency (count from data)

- Severity Score (1–5) where 1 = Minor inconvenience or annoyance; 3 = Frustrating but workaround exists; 5 = Blocking issue

- Estimated Effort (Low / Medium / High), where Low = Copy or content tweak; Medium = Logic/UX/UI change; High = Significant changes.”

WIRE gives you everything you need to stop guessing and start designing your prompts with purpose. When you start with WIRE, your prompting is like a briefing, treating AI like a collaborator.

Once you’ve mastered this core structure, you can layer in additional fidelity, like tone, step-by-step flow, or iterative feedback, using the FRAME elements. These five elements provide additional guidance and clarity to your prompt by layering clear deliverables, thoughtful tone, reusable structure, and space for creative iteration.

F: Flow of Tasks

Break complex prompts into clear, ordered steps.

This is your project plan or creative workflow that lays out the stages, dependencies, or sequence of execution. When the task has multiple parts, don’t just throw it all into one sentence. You are doing the thinking and guiding AI. Structure it like steps in a user journey or modules in a storyboard. In this example, it serves as the blueprint the AI uses to generate the output described in “E: Expected Output”.

Example: “Recommended flow of tasks:

Step 1: Parse the uploaded data and extract discrete pain points.

Step 2: Group them into themes based on pattern similarity.

Step 3: Score each theme by frequency (from data), severity (based on content), and estimated effort.

Step 4: Map each theme to the appropriate customer journey stage(s).

Step 5: For each theme, write a clear problem statement and opportunity based only on what’s in the data.”

R: Reference Voice or Style

Name the desired tone, mood, or reference brand.

This is the brand voice section or style mood board — reference points that shape the creative feel. Sometimes you want buttoned-up. Other times, you want conversational. Don’t assume the AI knows your tone, so spell it out.

Example: “Use the tone of a UX insights deck or product research report. Be concise, pattern-driven, and objective. Make summaries easy to scan by product managers and design leads.”

A: Ask for Clarification

Invite the AI to ask questions before generating, if anything is unclear.

This is your “Any questions before we begin?” moment — a key step in collaborative creative work. You wouldn’t want a freelancer to guess what you meant if the brief was fuzzy, so why expect AI to do better? Ask AI to reflect or clarify before jumping into output mode.

Example: “If the uploaded data is missing or unclear, ask for it before continuing. Also, ask for clarification if the feedback format is unstructured or inconsistent, or if the scoring criteria need refinement.”

M: Memory (Within The Conversation)

Reference earlier parts of the conversation and reuse what’s working.

This is similar to keeping visual tone or campaign language consistent across deliverables in a creative brief. Prompts are rarely one-shot tasks, so this reminds AI of the tone, audience, or structure already in play. GPT-5 has gotten better with memory, but this remains a useful element, especially if you switch topics or jump around.

Example: “Unless I say otherwise, keep using this process: analyze the data, group into themes, rank by importance, then suggest an action for each.”

E: Evaluate & Iterate

Invite the AI to critique, improve, or generate variations.

This is your revision loop — your way of prompting for creative direction, exploration, and refinement. Just like creatives expect feedback, your AI partner can handle review cycles if you ask for them. Build iteration into the brief to get closer to what you actually need. Sometimes, you may see ChatGPT test two versions of a response on its own by asking for your preference.

Example: “After listing all themes, identify the one with the highest combined priority score (based on frequency, severity, and effort).

For that top-priority theme:

- Critically evaluate its framing: Is the title clear? Are the quotes strong and representative? Is the journey mapping appropriate?

- Suggest one improvement (e.g., improved title, more actionable implication, clearer quote, tighter summary).

- Rewrite the theme entry with that improvement applied.

- Briefly explain why the revision is stronger and more useful for product or design teams.”

Here’s a quick recap of the WIRE+FRAME framework:

| Framework Component | Description |
|---|---|
| W: Who & What | Define the AI persona and the core deliverable. |
| I: Input Context | Provide background or data scope to frame the task. |
| R: Rules & Constraints | Set boundaries. |
| E: Expected Output | Spell out the format and fields of the deliverable. |
| F: Flow of Tasks | Break the work into explicit, ordered sub-tasks. |
| R: Reference Voice/Style | Name the tone, mood, or reference brand to ensure consistency. |
| A: Ask for Clarification | Invite AI to pause and ask questions if any instructions or data are unclear before proceeding. |
| M: Memory | Leverage in-conversation memory to recall earlier definitions, examples, or phrasing without restating them. |
| E: Evaluate & Iterate | After generation, have the AI self-critique the top outputs and refine them. |

And here’s the full WIRE+FRAME prompt:

(W) You are a senior UX researcher and customer insights analyst. You specialize in synthesizing qualitative data from diverse sources to identify patterns, surface user pain points, and map them across customer journey stages. Your outputs directly inform product, UX, and service priorities.

(I) You are analyzing customer feedback for Fintech Brand’s app, targeting Gen Z users. Feedback will be uploaded from sources such as app store reviews, survey feedback, and usability test transcripts.

(R) Only analyze the uploaded customer feedback data. Do not fabricate pain points, representative quotes, journey stages, or patterns. Do not supplement with prior knowledge or hypothetical examples. Use clear, neutral, stakeholder-facing language.

(E) Return a structured list of themes. For each theme, include:

- Theme Title

- Summary of the Issue

- Problem Statement

- Opportunity

- Representative Quotes (from data only)

- Journey Stage(s)

- Frequency (count from data)

- Severity Score (1–5) where 1 = Minor inconvenience or annoyance; 3 = Frustrating but workaround exists; 5 = Blocking issue

- Estimated Effort (Low / Medium / High), where Low = Copy or content tweak; Medium = Logic/UX/UI change; High = Significant changes

(F) Recommended flow of tasks:

Step 1: Parse the uploaded data and extract discrete pain points.

Step 2: Group them into themes based on pattern similarity.

Step 3: Score each theme by frequency (from data), severity (based on content), and estimated effort.

Step 4: Map each theme to the appropriate customer journey stage(s).

Step 5: For each theme, write a clear problem statement and opportunity based only on what’s in the data.

(R) Use the tone of a UX insights deck or product research report. Be concise, pattern-driven, and objective. Make summaries easy to scan by product managers and design leads.

(A) If the uploaded data is missing or unclear, ask for it before continuing. Also, ask for clarification if the feedback format is unstructured or inconsistent, or if the scoring criteria need refinement.

(M) Unless I say otherwise, keep using this process: analyze the data, group into themes, rank by importance, then suggest an action for each.

(E) After listing all themes, identify the one with the highest combined priority score (based on frequency, severity, and effort).

For that top-priority theme:

- Critically evaluate its framing: Is the title clear? Are the quotes strong and representative? Is the journey mapping appropriate?

- Suggest one improvement (e.g., improved title, more actionable implication, clearer quote, tighter summary).

- Rewrite the theme entry with that improvement applied.

- Briefly explain why the revision is stronger and more useful for product or design teams.

You could use “##” to label the sections (e.g., “##FLOW”) more for your readability than for AI. At over 400 words, this Insights Synthesis prompt example is a detailed, structured prompt, but it isn’t customized for you and your work. The intent wasn’t to give you a specific prompt (the proverbial fish), but to show how you can use a prompt framework like WIRE+FRAME to create a customized, relevant prompt that will help AI augment your work (teaching you to fish).
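To make the “##” labeling concrete, here is a minimal Python sketch of assembling a prompt from labeled sections. Only “##FLOW” comes from the example above; the other label names, the `build_prompt` function, and the rule that all four WIRE sections must be present are assumptions made for illustration, not part of the framework itself.

```python
# Hypothetical labels: only "FLOW" appears in the article; the rest are assumed.
WIRE = ["WHO", "INPUT", "RULES", "OUTPUT"]
FRAME = ["FLOW", "VOICE", "ASK", "MEMORY", "EVALUATE"]


def build_prompt(sections: dict[str, str]) -> str:
    """Render the given sections in WIRE+FRAME order under '##' labels.

    WIRE sections are treated as required and FRAME sections as optional
    (an assumption that mirrors "WIRE is the must-have skeleton").
    """
    unknown = [label for label in sections if label not in WIRE + FRAME]
    if unknown:
        raise ValueError(f"Unknown sections: {', '.join(unknown)}")

    missing = [label for label in WIRE if not sections.get(label, "").strip()]
    if missing:
        raise ValueError(f"Missing WIRE sections: {', '.join(missing)}")

    parts = []
    for label in WIRE + FRAME:
        text = sections.get(label, "").strip()
        if text:  # skip FRAME sections that were not provided
            parts.append(f"##{label}\n{text}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    prompt = build_prompt({
        "WHO": "You are a senior UX researcher and customer insights analyst.",
        "INPUT": "You are analyzing customer feedback for Fintech Brand's app.",
        "RULES": "Only analyze the uploaded customer feedback data.",
        "OUTPUT": "Return a structured list of themes.",
        "FLOW": "Step 1: Parse the uploaded data and extract discrete pain points.",
    })
    print(prompt)
```

Keeping the order fixed in code means every prompt built this way reads the same, which helps when you scan or compare prompts later.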

Keep in mind that prompt length is rarely the problem; a lack of quality and structure is. As of the time of writing, AI models can easily process prompts that are thousands of words long.

Not every prompt needs all the FRAME components; WIRE is often enough to get the job done. But when the work is strategic or highly contextual, pick components from FRAME — the extra details can make a difference. Together, WIRE+FRAME give you a detailed framework for creating a well-structured prompt, with the crucial components first, followed by optional components:

- WIRE builds a clear, focused prompt with role, input, rules, and expected output.

- FRAME adds refinement like tone, reusability, and iteration.

Here are some scenarios and recommendations for using WIRE or WIRE+FRAME:

| Scenarios | Description | Recommended |
|---|---|---|
| Simple, One-Off Analyses | Quick prompting with minimal setup and no need for detailed process transparency. | WIRE |
| Tight Sprints or Hackathons | Rapid turnarounds, and times you don’t need embedded review and iteration loops. | WIRE |
| Highly Iterative Exploratory Work | You expect to tweak results constantly and prefer manual control over each step. | WIRE |
| Complex Multi-Step Playbooks | Detailed workflows that benefit from a standardized, repeatable, visible sequence. | WIRE+FRAME |
| Shared or Hand-Off Projects | When different teams will rely on embedded clarification, memory, and consistent task flows for recurring analyses. | WIRE+FRAME |
| Built-In Quality Control | You want the AI to flag top issues, self-critique, and refine, minimizing manual QC steps. | WIRE+FRAME |

Prompting isn’t about getting it right the first time. It’s about designing the interaction and redesigning when needed. With WIRE+FRAME, you’re going beyond basic prompting and designing the interaction between you and AI.

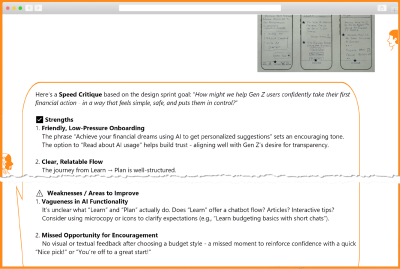

From Gut Feel To Framework: A Prompt Makeover

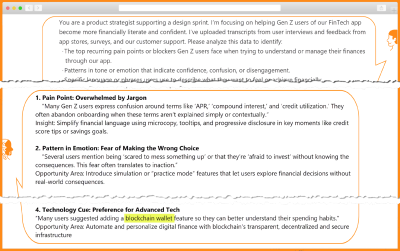

Let’s compare the results of Kate’s first AI-augmented design sprint prompt (to synthesize customer feedback into design insights) with one based on the WIRE+FRAME prompt framework, with the same data and focusing on the top results:

Original prompt: Read this customer feedback and tell me how we can improve our app for Gen Z users.

Initial ChatGPT Results:

- Improve app reliability to reduce crashes and freezing.

- Provide better guidance or tutorials for financial tools like budgeting or goal setting.

- Enhance the transparency of Zelle transfers by showing confirmation messages.

- Speed up app loading and reduce lag on key actions.

With this version, you’d likely need to go back and forth with follow-up questions, rewrite the output for clarity, and add structure before sharing with your team.

WIRE+FRAME prompt above (with defined role, scope, rules, expected format, tone, flow, and evaluation loop).

Initial ChatGPT Results:

You can clearly see the very different results from the two prompts, both using the exact same data. While the first prompt returns a quick list of ideas, the detailed WIRE+FRAME version doesn’t just summarize feedback but structures it. Themes are clearly labeled, supported by user quotes, mapped to customer journey stages, and prioritized by frequency, severity, and effort.

The structured prompt results can be used as-is or shared without needing to reformat, rewrite, or explain them (see disclaimer below). The first prompt output needs massaging: it’s not detailed, lacks evidence, and would require several rounds of clarification to be actionable. The first prompt may work when the stakes are low and you are exploring. But when your prompt is feeding design, product, or strategy, structure comes to the rescue.

Disclaimer: Know Your Data

A well-structured prompt can make AI output more useful, but it shouldn’t be the final word, or your single source of truth. AI models are powerful pattern predictors, not fact-checkers. If your data is unclear or poorly referenced, even the best prompt may return confident nonsense. Don’t blindly trust what you see. Treat AI like a bright intern: fast, eager, and occasionally delusional. You should always be familiar with your data and validate what AI spits out. For example, in the WIRE+FRAME results above, AI rated the effort as low for financial tool onboarding. That could easily be a medium or high. Good prompting should be backed by good judgment.
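One lightweight way to act on this is to check that the “Representative Quotes” the AI returns actually occur in your feedback data. The following is a minimal Python sketch, assuming you have the raw feedback as text and the returned quotes as a list; the function names and the sample feedback are made up for illustration. It only catches quotes that are not verbatim, so it supplements reading the data yourself rather than replacing it.

```python
import re


def normalize(text: str) -> str:
    """Lowercase, straighten curly quotes, and collapse whitespace."""
    text = text.replace("\u2019", "'").replace("\u2018", "'")
    text = text.replace("\u201c", '"').replace("\u201d", '"')
    return re.sub(r"\s+", " ", text).strip().lower()


def unverified_quotes(quotes: list[str], feedback: str) -> list[str]:
    """Return the quotes that do not appear verbatim in the feedback."""
    haystack = normalize(feedback)
    flagged = []
    for quote in quotes:
        needle = normalize(quote)
        # Empty quotes are flagged too, since they cannot be verified.
        if not needle or needle not in haystack:
            flagged.append(quote)
    return flagged


if __name__ == "__main__":
    # Made-up sample data, not taken from the article's dataset.
    feedback = (
        "The app froze twice while I was sending money.\n"
        "I couldn't find the budgeting tool."
    )
    quotes = ["The app froze twice", "Transfers take forever"]
    print(unverified_quotes(quotes, feedback))
```

Any quote this flags is worth tracing back to the source before the theme goes into a deck.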

Try This Now

Start by using the WIRE+FRAME framework to create a prompt that will help AI augment your work. You could also rewrite the last prompt you were not satisfied with, using the WIRE+FRAME, and compare the output.

Feel free to use this simple tool to guide you through the framework.

Methods: From Lone Prompts to a Prompt System

Just as design systems have reusable components, your prompts can too. You can use the WIRE+FRAME framework to write detailed prompts, but you can also use the structure to create reusable components that are pre-tested, plug-and-play pieces you can assemble to build high-quality prompts faster. Each part of WIRE+FRAME can be transformed into a prompt component: small, reusable modules that reflect your team’s standards, voice, and strategy.

For instance, if you find yourself repeatedly using the same content for different parts of the WIRE+FRAME framework, you could save them as reusable components for you and your team. In the example below, we have two different reusable components for “W: Who & What” — an insights analyst and an information architect.

W: Who & What

- You are a senior UX researcher and customer insights analyst. You specialize in synthesizing qualitative data from diverse sources to identify patterns, surface user pain points, and map them across customer journey stages. Your outputs directly inform product, UX, and service priorities.

- You are an experienced information architect specializing in organizing enterprise content on intranets. Your task is to reorganize the content and features into categories that reflect user goals, reduce cognitive load, and increase findability.

Create and save prompt components and variations for each part of the WIRE+FRAME framework, allowing your team to quickly assemble new prompts by combining components when available, rather than starting from scratch each time.
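As a rough illustration of such a prompt system, here is a minimal Python sketch of a component library. The `COMPONENTS` dictionary, its keys, and the `assemble` function are hypothetical names; the component texts are shortened from the examples in this article. A shared document or spreadsheet works just as well; the point is that each part has named, pre-tested variants.

```python
# Hypothetical component library: part name -> variant name -> saved text.
COMPONENTS = {
    "who_what": {
        "insights_analyst": (
            "You are a senior UX researcher and customer insights analyst."
        ),
        "information_architect": (
            "You are an experienced information architect specializing in "
            "organizing enterprise content on intranets."
        ),
    },
    "rules": {
        "data_only": "Only analyze the uploaded customer feedback data.",
    },
}


def assemble(choices: dict[str, str]) -> str:
    """Join the chosen variant of each part, in the order the parts are given."""
    blocks = []
    for part, variant in choices.items():
        try:
            blocks.append(COMPONENTS[part][variant])
        except KeyError:
            raise KeyError(
                f"No saved component for {part!r} / {variant!r}"
            ) from None
    return "\n\n".join(blocks)


if __name__ == "__main__":
    # Swap one variant name to reuse the same rules with a different role.
    print(assemble({"who_what": "information_architect", "rules": "data_only"}))
```

Changing a single variant name is all it takes to brief a different “hat” while keeping the rest of the prompt consistent.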

Behind The Prompts: Questions About Prompting

Q: If I use a prompt framework like WIRE+FRAME every time, will the results be predictable?

A: Yes and no. Yes, your outputs will be shaped by a consistent set of instructions (e.g., Rules, Examples, Reference Voice / Style) that guide the AI toward a predictable format and style of results. And no: while the framework provides structure, it doesn’t flatten the generative nature of AI; it focuses it on what’s important to you. In the next article, we will look at how you can use this to your advantage to quickly reuse your best repeatable prompts as we build your AI assistant.

Q: Could changes to AI models break the WIRE+FRAME framework?

A: AI models are evolving more rapidly than any other technology we’ve seen before — in fact, ChatGPT was recently updated to GPT-5 to mixed reviews. The update didn’t change the core principles of prompting or the WIRE+FRAME prompt framework. With future releases, some elements of how we write prompts today may change, but the need to communicate clearly with AI won’t. Think of how you delegate work to an intern vs. someone with a few years’ experience: you still need detailed instructions the first time either is doing a task, but the level of detail may change. WIRE+FRAME isn’t built only for today’s models; the components help you clarify your intent, share relevant context, define constraints, and guide tone and format — all timeless elements, no matter how smart the model becomes. The skill of shaping clear, structured interactions with non-human AI systems will remain valuable.

Q: Can prompts be more than text? What about images or sketches?

A: Absolutely. With tools like GPT-5 and other multimodal models, you can upload screenshots, pictures, whiteboard sketches, or wireframes. These visuals become part of your Input Context or help define the Expected Output. The same WIRE+FRAME principles still apply: you’re setting context, tone, and format, just using images and text together. Whether your input is a paragraph or an image and text, you’re still designing the interaction.

Have a prompt-related question of your own? Share it in the comments, and I’ll either respond there or explore it further in the next article in this series.

From Designerly Prompting To Custom Assistants

Good prompts and results don’t come from using others’ prompts, but from writing prompts that are customized for you and your context. The WIRE+FRAME framework helps with that and makes prompting a tool you can use to guide AI models like a creative partner instead of hoping for magic from a one-line request.

Prompting uses the designerly skills you already use every day to collaborate with AI:

- Curiosity to explore what the AI can do and frame better prompts.

- Observation to detect bias or blind spots.

- Empathy to make machine outputs human.

- Critical thinking to verify and refine.

- Experiment & Iteration to learn by doing and improve the interaction over time.

- Growth Mindset to keep up with new technology like AI and prompting.

Once you create and refine prompt components and prompts that work for you, make them reusable by documenting them. But wait, there’s more — what if your best prompts, or the elements of your prompts, could live inside your own AI assistant, available on demand, fluent in your voice, and trained on your context? That’s where we’re headed next.

In the next article, “Design Your Own Design Assistant”, we’ll take what you’ve learned so far and turn it into a Custom AI assistant (aka Custom GPT), a design-savvy, context-aware assistant that works like you do. We’ll walk through that exact build, from defining the assistant’s job description to uploading knowledge, testing, and sharing it with others.

Resources

- GPT-5 Prompting Guide

- GPT-4.1 Prompting Guide

- Anthropic Prompt Engineering

- Prompt Engineering by Google

- Perplexity

- Webapp to guide you through the WIRE+FRAME framework

Designing For TV: The Evergreen Pattern That Shapes TV Experiences

Milan Balać 2025-08-27T13:00:00+00:00

2025-08-27T15:32:36+00:00

Television sets have been the staple of our living rooms for decades. We watch, we interact, and we control, but how often do we design for them? TV design flew under my “radar” for years, until one day I found myself in the deep, designing TV-specific user interfaces. Now, after gathering quite a bit of experience in the area, I would like to share my knowledge on this rather rare topic. If you’re interested in learning more about the user experience and user interfaces of television, this article should be a good starting point.

Just like any other device or use case, TV has its quirks, specifics, and guiding principles. Before getting started, it will be beneficial to understand the core ins and outs. In Part 1, we’ll start with a bit of history, take a close look at the fundamentals, and review the evolution of television. In Part 2, we’ll dive into the depths of practical aspects of designing for TV, including its key principles and patterns.

Let’s start with the two key paradigms that dictate the process of designing TV interfaces.

Mind The Gap, Or The 10-foot-experience

Firstly, we have the so-called “10-foot experience,” referring to the fact that interaction and consumption on TV happen from a distance of roughly three or more meters. This is significantly different from interacting with a phone or a computer and calls for some specific approaches in TV user interface design. For example, we’ll need to make text and user interface (UI) elements larger on TV to account for the greater distance to the screen.

Furthermore, we’ll take extra care to adhere to contrast standards, primarily relying on dark interfaces, as light ones may be too blinding in darker surroundings. And finally, considering the laid-back nature of the device, we’ll simplify the interactions.

But the 10-foot experience is only one part of the equation. There wouldn’t be a “10-foot experience” in the first place if there were no mediator between the user and the device, and if we didn’t have something to interact through from a distance.

There would be no 10-foot experience if there were no remote controllers.

The Mediator

The remote, the second half of the equation, is what allows us to interact with the TV from the comfort of the couch. Slower and more deliberate, this conglomerate of buttons lacks the fluid motion of a mouse, or the dexterity of fingers against a touchscreen — yet the capabilities of the remote should not be underestimated.

Rudimentary as it is and with a limited set of functions, the remote allows for some interesting design approaches and can carry the weight of the modern TV along with its ever-growing requirements for interactivity. It underwent a handful of overhauls during the seventy years since its inception and was refined and made more ergonomic; however, there is a 40-year-old pattern so deeply ingrained in its foundation that nothing can change it.

What if I told you that you could navigate TV interfaces and apps with a basic controller from the 1980s just as well as with the latest remote from Apple? Not only that, but any experience built around the six core buttons of a remote will be system-agnostic and will easily translate across platforms.

This is the main point I will focus on for the rest of this article.

Birth Of A Pattern

As television sets were taking over people’s living rooms in the 1950s, manufacturers sought to upgrade and improve the user experience. The effort of walking up to the device to manually adjust some settings was eventually identified as an area for improvement, and as a result, the first television remote controllers were introduced to the market.

Early Developments

Preliminary iterations of the remotes were rather unique, and it took some divergence before we finally settled on a rectangular shape and sprinkled buttons on top.

Take a look at the Zenith Flash-Matic, for example. Designed in the mid-1950s, this standout device featured a single button that triggered a directional lamp; by pointing it at specific corners of the TV set, viewers could control various functions, such as changing channels or adjusting the volume.

While they were a far cry compared to their modern counterparts, devices like the Flash-Matic set the scene for further developments, and we were off to the races!

As the designs evolved, the core functionality of the remote solidified. Gradually, remote controls became more than just simple channel changers, evolving into command centers for the expanding territory of home entertainment.

Note: I will not go too much into history here — aside from some specific points that are of importance to the matter at hand — but if you have some time to spare, do look into the developmental history of television sets and remotes, it’s quite a fascinating topic.

However, practical as they may have been, they were still considered a luxury, significantly increasing the prices of TV sets. As the 1970s were coming to a close, only around 17% of United States households had a remote controller for their TVs. Yet, things would change as the new decade rolled in.

Button Mania Of The 1980s

The eighties brought with them the Apple Macintosh, MTV, and Star Wars. It was a time of cultural shifts and technological innovation. Videocassette recorders (VCRs) and a multitude of other consumer electronics found their place in the living rooms of the world, along with TVs.

These new devices, while enriching our media experiences, also introduced a few new design problems. Where there was once a single remote, now there were multiple remotes, and things were slowly getting out of hand.

This marked the advent of universal remotes.

Trying to hit many targets with one stone, the unwieldy universal remotes were humanity’s best solution for controlling a wider array of devices. And they did solve some of these problems, albeit in an awkward way. The complexity of universal remotes was a trade-off for versatility, allowing them to be programmed and used as a command center for controlling multiple devices. This meant transforming the relatively simple design of their predecessors into a beehive of buttons, prioritizing broader compatibility over elegance.

On the other hand, almost as a response to the inconvenience of the universal remote, a different type of controller was conceived in the 1980s — one with a very basic layout and set of buttons, and which would leave its mark in both how we interact with the TV, and how our remotes are laid out. A device that would, knowingly or not, give birth to a navigational pattern that is yet to be broken — the NES controller.

D-pad Dominance

Released in 1985, the Nintendo Entertainment System (NES) was an instant hit. Having sold sixty million units around the world, it left an undeniable mark on the gaming console industry.

The NES controller (which was not truly remote, as it ran a cable to the central unit) introduced the world to a deceptively simple control scheme. Consisting of six primary actions, it gave us the directional pad (the D-pad), along with two action buttons (A and B). Made in response to the bulky joystick, the cross-shaped cluster allowed for easy movement along two axes (up, down, left, and right).

Charmingly intuitive, this navigational pattern would produce countless hours of gaming fun, but more importantly, its elementary design would “seep over” into the wider industry — the D-pad, along with the two action buttons, would become the very basis on which future remotes would be constructed.

The world continued spinning madly on, and what was once a luxury became commonplace. By the end of the decade, TV remotes were more integral to the standard television experience, and more than two-thirds of American TV owners had some sort of a remote.

The nineties rolled in with further technological advancements. TV sets became more robust, allowing for finer tuning of their settings. This meant creating interfaces through which such tasks could be accomplished, and along with their master sets, remotes got updated as well.

Gone were the bulky rectangular behemoths of the eighties. As ergonomics took precedence, they got replaced by comfortably contoured devices that better fit their users’ hands. Once conglomerations of dozens of uniform buttons, these contemporary remotes introduced different shapes and sizes, allowing for recognition simply through touch. Commands were being clustered into sensible groups along the body of the remote, and within those button groups, a familiar shape started to emerge.

Gradually, the D-pad found its spot on our TV remotes. As the evolution of these devices progressed, it became even more deeply embedded at the core of their interactivity.

Set-top boxes and smart features emerged in the 2000s and 2010s, and TV technology continued to advance. Along the way, many bells and whistles were introduced. TVs got bigger, brighter, thinner, yet their essence remained unchanged.

In the years since their inception, remotes have been innovated upon, but every undertaking circles back to the core principles of the NES controller. Later endeavours never managed to replace the pattern, only to augment and reinforce it.

The Evergreen Pattern

In 2013, LG introduced their Magic remote (“So magically simple, the kids will be showing you how to use it!”). This uniquely shaped device enabled motion controls on LG TV sets, allowing users to point and click similar to a computer mouse. Having a pointer on the screen allowed for much more flexibility and speed within the system, and the remote was well-received and praised as one of the best smart TV remotes.

Innovating on tradition, this device introduced new features and fresh perspectives to the world of TV. But if we look at the device itself, we’ll see that, despite its differences, it still retains the D-pad as a means of interaction. It may be argued that LG never set out to replace the directional pad, and as it stands, regardless of their intent, they only managed to augment it.

For an even better example, let’s examine Apple TV’s second-generation remotes (the first-generation Siri remote). Being the industry disruptors, Apple introduced a touchpad to the top half of the remote. The glass surface provided briskness and precision to the experience, enabling multi-touch gestures, swipe navigation, and quick scrolling. This quality of life upgrade was most noticeable when typing with the horizontal on-screen keyboards, as it allowed for smoother and quicker scrolling from A to Z, making for a more refined experience.

While at first glance it may seem Apple removed the directional buttons, the fact is that the touchpad is simply a modernised take on the pattern, still abiding by the same four directions a classic D-pad does. You could say it’s a D-pad with an extra layer of gimmick.

Furthermore, the touchpad didn’t sit well with the user base, and the remote’s ergonomics were a bit iffy. So instead of pushing the boundaries even further with their third generation of remotes, Apple did a complete 180, re-introducing the classic D-pad cluster while keeping the touch capabilities from the previous generation (the touch-enabled clickpad lets you select titles, swipe through playlists, and use a circular gesture on the outer ring to find just the scene you’re looking for).

Now, why can’t we figure out a better way to navigate TVs? Does that mean we shouldn’t try to innovate?

We can argue that using motion controls and gestures is an obvious upgrade to interacting with a TV. And we’d be right… in principle. These added features are more complex and costly to produce, but more importantly, while it has been upgraded with bits and bobs, the TV is essentially a legacy system. And it’s not only that.

While touch controls are a staple of interaction these days, adding them without thorough consideration can reduce the usability of a remote.

Pitfalls Of Touch Controls

Modern car dashboards are increasingly being dominated by touchscreens. While they may impress at auto shows, their real-world usability is often compromised.

Driving demands constant focus and the ability to adapt and respond to ever-changing conditions. Any interface that requires taking your eyes off the road for more than a moment increases the risk of accidents. That’s exactly where touch controls fall short. While they may be more practical (and likely cheaper) for manufacturers to implement, they’re often the opposite for the end user.

Unlike physical buttons, knobs, and levers, which offer tactile landmarks and feedback, touch interfaces lack the ability to be used by feeling alone. Even simple tasks like adjusting the volume of the radio or the climate controls often involve gestures and nested menus, all performed on a smooth glass surface that demands visual attention, especially when fine-tuning.

Fortunately, Euro NCAP’s safety rating protocols, due to take effect in 2026, will encourage car manufacturers to reintroduce physical controls for core functions, reducing driver distraction and promoting safer interaction.

Similarly (though far less critically), sleek, buttonless TV remote controls may feel modern, but they introduce unnecessary abstraction to a familiar set of controls.

Physical buttons with distinct shapes and positioning allow users to navigate by memory and touch, even in the dark. That’s not outdated — it’s a deeper layer of usability that modern design should respect, not discard.

And this is precisely why Apple reworked the third-generation Apple TV remote into what it is now: the touch area at the top disappeared, the D-pad regained clearly defined buttons, and at the same time it was extended (not replaced) to accept some touch gestures.

The Legacy Of TV

Let’s take a look at an old on-screen keyboard.

The Legend of Zelda, released in 1986, allowed players to register their names in-game. There are even older games with the same feature, but that’s beside the point. Using the NES controller, the players would move around the keyboard, entering their moniker character by character. Now let’s take a look at a modern iteration of the on-screen keyboard.

Notice the difference? Or, to phrase it better: do you notice the similarities? Throughout the years, we’ve introduced quality of life improvements, but the core is exactly the same as it was forty years ago. And it is neither a lack of innovation nor bad remotes that keeps TV so deeply rooted in its beginnings. It’s simply the optimal way to interact given the circumstances.

Laying It All Out

Just like phones and computers, TV layouts are based on a grid system. However, this system is a lot more apparent and rudimentary on TV. Taking a look at a standard TV interface, we’ll see that it consists mainly of horizontal and vertical lists, also known as shelves.

These grids may be populated with cards, characters of the alphabet, or essentially anything else. Upon closer examination, we’ll notice that our movement is restricted by a few factors:

- There is no pointer for our eyes to follow, like there would be on a computer.

- There is no way to interact directly with the display like we would with a touchscreen.

For the purposes of navigating with a remote, a focus state is introduced. This means that an element will always be highlighted for our eyes to anchor, and it will be the starting point for any subsequent movement within the interface.

Moreover, starting from the focused element, we can notice that the movement is restricted to one item at a time, almost like skipping stones. Navigating linearly in such a manner, if we wanted to move within a list of elements from element #1 to element #5, we’d have to press a directional button four times.
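The focus model described above can be sketched in a few lines of TypeScript. This is a minimal illustration under stated assumptions, not how any particular TV platform implements focus: the names `Direction`, `FocusPosition`, `moveFocus`, and `pressesToReach` are hypothetical, each shelf is reduced to its item count, and focus simply stops at the edges instead of wrapping around.

```typescript
// Hypothetical sketch: none of these names come from a real TV framework.
type Direction = "up" | "down" | "left" | "right";

interface FocusPosition {
  row: number; // which shelf is focused
  col: number; // which item within that shelf is focused
}

// Keep a value inside the range [0, max].
function clamp(value: number, max: number): number {
  return Math.min(Math.max(value, 0), max);
}

// `shelves` holds the item count of each shelf, top to bottom.
// Assumes at least one shelf and at least one item per shelf.
function moveFocus(
  shelves: number[],
  focus: FocusPosition,
  dir: Direction
): FocusPosition {
  // Index of the last item in a given shelf.
  const lastCol = (row: number): number => Math.max((shelves[row] ?? 1) - 1, 0);

  if (dir === "left" || dir === "right") {
    const step = dir === "right" ? 1 : -1;
    return { row: focus.row, col: clamp(focus.col + step, lastCol(focus.row)) };
  }

  const step = dir === "down" ? 1 : -1;
  const row = clamp(focus.row + step, shelves.length - 1);
  // Shelves can differ in length, so keep the column inside the new shelf.
  return { row, col: clamp(focus.col, lastCol(row)) };
}

// Count the presses needed to walk right from the first item to `targetCol`.
function pressesToReach(shelves: number[], targetCol: number): number {
  let current: FocusPosition = { row: 0, col: 0 };
  let presses = 0;
  while (current.col < targetCol) {
    const next = moveFocus(shelves, current, "right");
    if (next.col === current.col) break; // reached the end of the shelf
    current = next;
    presses += 1; // one press moves focus by exactly one item
  }
  return presses;
}
```

Moving from element #1 to element #5 (indices 0 to 4) on a five-item shelf takes four presses, so `pressesToReach([5, 3], 4)` returns `4`. Clamping at the edges is only one possible choice; some interfaces wrap around to the start of the shelf instead.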

To successfully navigate such an interface, we need the ability to move left, right, up, and down — we need a D-pad. And once we’ve landed on our desired item, there needs to be a way to select it or make a confirmation, and in the case of a mistake, we need to be able to go back. For the purposes of those two additional interactions, we’d need two more buttons, OK and back, or to make it more abstract, we’d need buttons A and B.

So, to successfully navigate a TV interface, we need only a NES controller.

Yes, we can enhance it with touchpads and motion gestures, augment it with voice controls, but this unshakeable foundation of interaction will remain as the very basic level of inherent complexity in a TV interface. Reducing it any further would significantly impair the experience, so all we’ve managed to do throughout the years is to only build upon it.

The D-pad and buttons A and B survived decades of innovation and technological shifts, and chances are they’ll survive many more. By understanding and respecting this principle, you can design intuitive, system-agnostic experiences and easily translate them across platforms. Knowing you can’t go simpler than these six buttons, you’ll easily build from the ground up and attach any additional framework-bound functionality to the time-tested core.
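One way to picture this system-agnostic core is as a small translation layer: whatever key names a platform reports are mapped onto the six actions, and anything else is left to platform-specific extras. The sketch below is an illustration under assumptions, not a reference implementation. `RemoteAction`, `KEY_TO_ACTION`, and `toRemoteAction` are hypothetical names, and the key strings follow the web’s `KeyboardEvent.key` naming; which key a given TV platform actually sends for “back” varies and should be checked against that platform’s documentation.

```typescript
// The six-input vocabulary: a D-pad plus OK (A) and back (B).
type RemoteAction = "up" | "down" | "left" | "right" | "ok" | "back";

// Hypothetical mapping from key names to actions. The arrow keys and Enter
// follow the web's KeyboardEvent.key values; treating Escape and Backspace
// as "back" is an assumption that differs between platforms.
const KEY_TO_ACTION = new Map<string, RemoteAction>([
  ["ArrowUp", "up"],
  ["ArrowDown", "down"],
  ["ArrowLeft", "left"],
  ["ArrowRight", "right"],
  ["Enter", "ok"],
  ["Escape", "back"],
  ["Backspace", "back"],
]);

// Returns the core action for a key, or undefined when the key is not part
// of the six-input core (for example, a platform-specific voice button).
function toRemoteAction(key: string): RemoteAction | undefined {
  return KEY_TO_ACTION.get(key);
}
```

With the core isolated like this, supporting another remote would mostly mean adding entries to the map, while the navigation logic keeps working with the same six actions.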

And once you get to grips with these paradigms, you’ll get into mapping and re-mapping buttons depending on context, and understand just how far you can go when designing for TV. You’ll be able to invent new experiences, conduct experiments, and challenge the patterns. But that is a topic for a different article.

Closing Thoughts

While designing for TV almost exclusively during the past few years, I was also often educating the stakeholders on the very principles outlined in this article. Trying to address their concerns about different remotes working slightly differently, I found respite in the simplicity of the NES controller and how it got the point across in an understandable way. Eventually, I expanded my knowledge by looking into the developmental history of the remote and was surprised that my analogy had backing in history. This is a fascinating niche, and there’s a lot more to share on the topic. I’m glad we started!

It’s vital to understand the fundamental “ins” and “outs” of any venture before getting practical, and TV is no different. Now that you understand the basics, go, dig in, and break some ground.

Having covered the underlying interaction patterns of TV experiences in detail, it’s time to get practical.

In Part 2, we’ll explore the building blocks of the 10-foot experience and how to best utilize them in your designs. We’ll review the TV design fundamentals (the screen, layout, typography, color, and focus/focus styles), and the common TV UI components (menus, “shelves,” spotlights, search, and more). I will also show you how to start thinking beyond the basics and to work with — and around — the constraints which we abide by when designing for TV. Stay tuned!

Further Reading

- “The 10 Foot Experience,” by Robert Stulle (Edenspiekermann)

Every user interface should offer effortless navigation and control. For the 10-foot experience, this is twice as important; with only up, down, left, right, OK and back as your input vocabulary, things had better be crystal clear. You want to sit back and enjoy without having to look at your remote — your thumb should fly over the buttons to navigate, select, and activate.

- “Introduction to the 10-Foot Experience for Windows Game Developers” (Microsoft Learn)

A growing number of people are using their personal computers in a completely new way. When you think of typical interaction with a Windows-based computer, you probably envision sitting at a desk with a monitor, and using a mouse and keyboard (or perhaps a joystick device); this is referred to as the 2-foot experience. But there’s another trend which you’ll probably start hearing more about: the 10-foot experience, which describes using your computer as an entertainment device with output to a TV. This article introduces the 10-foot experience and explores the list of things that you should consider first about this new interaction pattern, even if you aren’t expecting your game to be played this way.

- “10-foot user interface” (Wikipedia)

In computing, a 10-foot user interface, or 3-meter UI, is a graphical user interface designed for televisions (TV). Compared to desktop computer and smartphone user interfaces, it uses text and other interface elements that are much larger in order to accommodate a typical television viewing distance of 10 feet (3.0 meters); in reality, this distance varies greatly between households, and additionally, the limitations of a television’s remote control necessitate extra user experience considerations to minimize user effort.

- “The Television Remote Control: A Brief History,” by Mary Bellis (ThoughtCo)

The first TV remote, the Lazy Bone, was made in 1950 and used a cable. In 1955, the Flash-matic was the first wireless remote, but it had issues with sunlight. Zenith’s Space Command in 1956 used ultrasound and became the popular choice for over 25 years.

- “The History of The TV Remote,” by Remy Millisky (Grunge)

The first person to create and patent the remote control was none other than Nikola Tesla, inventor of the Tesla coil and numerous electronic systems. He patented the idea in 1893 to drive boats remotely, far before televisions were invented. Since then, remotes have come a long way, especially for the television, changing from small boxes with long wires to the wireless universal remotes that many people have today. How has the remote evolved over time?

- “Nintendo Entertainment System controller” (Nintendo Wiki)

The Nintendo Entertainment System controller is the main controller for the NES. While previous systems had used joysticks, the NES controller provided a directional pad (the D-pad was introduced in the Game & Watch version of Donkey Kong).

- “Why Touchscreens In Cars Don’t Work,” by Jacky Li (published in June 2018)

Observing the behaviour of 21 drivers has made me realize what’s wrong with automotive UX. […] While I was excited to learn more about the Tesla Model X, it slowly became apparent to me that the driver’s eyes were more glued to the screen than the road. Something about interacting with a touchscreen when driving made me curious to know: just how distracting are they?

- “Europe Is Requiring Physical Buttons For Cars To Get Top Safety Marks,” by Jason Torchinsky (published in March 2024)